Language

The language is a function that returns a Language object.

The object must contain 3 required properties, id, name and grammar,

and accepts 2 more optional properties.

| Property | Description |
|---|---|
| id | The unique language ID |
| name | The language name |
| alias | Optional. Define aliases that must also be unique among all IDs and other aliases |
| source | Optional. Define source patterns |
| grammar | A Grammar object that is a collection of tokenizers |

The alias is an optional property that defines aliases of the language, such as a "js" shorthand for JavaScript.

The id and aliases must be unique among all IDs and aliases.
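The uniqueness rule can be checked mechanically before languages are registered. The following is only a minimal sketch and not part of RyuseiLight: `findConflicts` is a hypothetical helper, and it assumes that `alias` is an array of strings, as in the commented-out line of the example in this section.

```javascript
// Hypothetical helper: returns every ID or alias that is used more than once.
function findConflicts( languages ) {
  const seen      = new Set();
  const conflicts = [];

  for ( const { id, alias = [] } of languages ) {
    for ( const name of [ id, ...alias ] ) {
      if ( seen.has( name ) ) {
        conflicts.push( name );
      } else {
        seen.add( name );
      }
    }
  }

  return conflicts;
}

const conflicts = findConflicts( [
  { id: 'javascript', alias: [ 'js' ] },
  { id: 'json', alias: [ 'js' ] }, // 'js' is already taken by the first language
] );

console.log( conflicts );
```

Here the second language reuses the `js` alias, so it is reported as a conflict.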

The grammar object must have at least one property named main which is an array of tokenizers:

export function myLang() {

return {

id: 'mylang',

name: 'My Language',

// alias: [ 'mine' ],

// source: {},

grammar: {

main: [],

},

};

}

This is the minimal definition of the language. Let's register and test it:

import { myLang } from './myLang';

RyuseiLight.register( myLang() );

const ryuseilight = new RyuseiLight();

const html = ryuseilight.html( `console.log( 'hi!' )`, { language: 'mylang' } );

console.log( html );

This works, but all of the code is tokenized as text (which is the default category).

Now we have to define the grammar by providing some tokenizers.

Main Tokenizers

Each tokenizer is defined by using a regular expression. There are a few things to keep in mind before you start:

- A sticky flag (y) is automatically added for better performance.

- When you use a dotAll flag (s), make sure your transpiler transforms it to [\s\S], since it is not supported by IE.

- Do not use a lookbehind assertion because it doesn't work on IE (a lookahead assertion is supported).
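To see what the sticky flag changes, here is a small sketch in plain JavaScript. `toSticky` is a hypothetical helper written for this illustration; it only assumes that the library adds `y` to each regex in a similar way.

```javascript
// Hypothetical helper: rebuilds a regex with the sticky flag added.
function toSticky( regex ) {
  const flags = regex.flags.includes( 'y' ) ? regex.flags : regex.flags + 'y';
  return new RegExp( regex.source, flags );
}

const code   = "foo 'bar'";
const string = toSticky( /'(?:\\'|.)*?'/ );

// A sticky regex only tries to match exactly at lastIndex.
string.lastIndex = 0;
const atStart = string.exec( code ); // no quote at index 0, so no match

string.lastIndex = 4;
const atQuote = string.exec( code ); // the quote is at index 4, so it matches

console.log( atStart, atQuote && atQuote[ 0 ] );
```

Because a sticky regex never scans ahead, a tokenizer can test each rule at the current position without accidentally skipping characters.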

Here is a simple example to tokenize single line comments (// comment) and strings ('string'):

{

main: [

[ 'comment', /\/\/.*/ ],

[ 'string', /'(?:\\'|.)*?'/ ],

],

}

The first parameter of the tokenizer is a category that the matched string will be categorized into,

and the second parameter is a RegExp object to search a token.

Even if a string contains //, such as ' // trap! ', this grammar works properly:

RyuseiLight searches for tokens from the beginning of the code,

so the 'string' tokenizer matches at the opening quote and consumes the whole string before the 'comment' tokenizer can see the // inside it.

By adding regexes like this, you can simply define your own language.
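The matching order described above can be reproduced with a tiny stand-alone loop. This is a simplified sketch, not the actual RyuseiLight lexer: the `tokenize` function is hypothetical, it assumes a single-line comment pattern `/\/\/.*/`, and it collects unmatched characters into `text` tokens.

```javascript
// Simplified sketch: tries each tokenizer at the current position, in order.
function tokenize( code, tokenizers ) {
  const sticky = tokenizers.map( ( [ category, regex ] ) => {
    return [ category, new RegExp( regex.source, regex.flags.replace( 'y', '' ) + 'y' ) ];
  } );

  const tokens = [];
  let index = 0;
  let text  = '';

  while ( index < code.length ) {
    let matched = false;

    for ( const [ category, regex ] of sticky ) {
      regex.lastIndex = index;
      const match = regex.exec( code );

      if ( match && match[ 0 ] ) {
        if ( text ) {
          tokens.push( [ 'text', text ] );
          text = '';
        }

        tokens.push( [ category, match[ 0 ] ] );
        index += match[ 0 ].length;
        matched = true;
        break;
      }
    }

    if ( ! matched ) {
      text += code[ index++ ]; // nothing matched here, keep it as plain text
    }
  }

  if ( text ) {
    tokens.push( [ 'text', text ] );
  }

  return tokens;
}

const main = [
  [ 'comment', /\/\/.*/ ],
  [ 'string', /'(?:\\'|.)*?'/ ],
];

const tokens = tokenize( "a = ' // trap! '; // real", main );
console.log( tokens );
```

The `//` inside the quotes never reaches the comment rule, because the position jumps past the whole string once the string rule has matched.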

Sub Tokenizers

The main purpose of sub tokenizers is to apply another set of tokenizers to the matched string. For example, when tokenizing HTML tags, it makes more sense to extract tags first and then tokenize attributes that belong to them, rather than doing that at the same time.

To do this, use # notation that indicates the ID of sub tokenizers like this:

{

main: [

[ '#tag', /<.*?>/s ],

],

tag: [

...

],

}

The string matched by /<.*?>/s will be tokenized by the set of tag tokenizers.

As you may have already noticed, sub tokenizers can also use other tokenizers:

{

main: [

[ '#tag', /<.*?>/s ],

],

tag: [

[ '#attr', /\s+.+(?=[\s/>])/s ],

[ 'tag', /[^\s/<>"'=]+/ ],

[ 'bracket', /[<>]/ ],

[ 'symbol', /[/]/ ],

],

attr: [

...

],

}

This system lets you break down a complex string into small fragments that are easy to handle.
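One way to picture sub tokenizers is as a recursive call on the matched string. The sketch below is a hypothetical, simplified `tokenize` function and not the library's implementation; the elided attr tokenizers are left out, so only the tag name, brackets and slash are handled.

```javascript
// Simplified sketch: a category starting with '#' re-tokenizes the match
// with the tokenizers stored under that ID.
function tokenize( code, grammar, name = 'main' ) {
  const tokens = [];
  let index = 0;

  while ( index < code.length ) {
    let matched = false;

    for ( const [ category, regex ] of grammar[ name ] ) {
      const sticky = new RegExp( regex.source, regex.flags.replace( 'y', '' ) + 'y' );
      sticky.lastIndex = index;
      const match = sticky.exec( code );

      if ( match && match[ 0 ] ) {
        if ( category.startsWith( '#' ) ) {
          tokens.push( ...tokenize( match[ 0 ], grammar, category.slice( 1 ) ) );
        } else {
          tokens.push( [ category, match[ 0 ] ] );
        }

        index += match[ 0 ].length;
        matched = true;
        break;
      }
    }

    if ( ! matched ) {
      tokens.push( [ 'text', code[ index++ ] ] );
    }
  }

  return tokens;
}

const grammar = {
  main: [
    [ '#tag', /<.*?>/s ],
  ],
  tag: [
    [ 'tag', /[^\s/<>"'=]+/ ],
    [ 'bracket', /[<>]/ ],
    [ 'symbol', /[/]/ ],
  ],
};

const tokens = tokenize( '<br/>', grammar );
console.log( tokens );
```

The main rule only decides where the tag begins and ends; the tag rules then split that fragment into smaller tokens.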

Including Tokenizers

Tokenizers can include other tokenizers by using # notation without providing a regex, which lets several sets share common rules:

{

common: [

[ 'space', /[ \t]+/ ],

[ 'symbol', /[:;.,]/ ],

],

tokenizers1: [

[ 'comment', /\/\*.*?\*\//s ],

[ '#common' ],

],

tokenizers2: [

[ '#common' ],

[ 'string', /'(?:\\'|.)*?'/ ],

],

}

This is exactly the same as the following grammar:

{

tokenizers1: [

[ 'comment', /\/\*.*?\*\//s ],

[ 'space', /[ \t]+/ ],

[ 'symbol', /[:;.,]/ ],

],

tokenizers2: [

[ 'space', /[ \t]+/ ],

[ 'symbol', /[:;.,]/ ],

[ 'string', /'(?:\\'|.)*?'/ ],

],

}
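The equivalence between the two grammars can be expressed as a small expansion step. This is a sketch under the assumption that an include is simply replaced in place by the rules it names; `expand` is a hypothetical helper, and it does not guard against sets that include each other in a cycle.

```javascript
// Hypothetical helper: replaces [ '#id' ] entries (no regex) with the rules of that ID.
function expand( grammar, name ) {
  return grammar[ name ].flatMap( tokenizer => {
    const [ category, regex ] = tokenizer;
    const isInclude = ! regex && category.startsWith( '#' );

    return isInclude ? expand( grammar, category.slice( 1 ) ) : [ tokenizer ];
  } );
}

const grammar = {
  common: [
    [ 'space', /[ \t]+/ ],
    [ 'symbol', /[:;.,]/ ],
  ],
  tokenizers1: [
    [ 'comment', /\/\*.*?\*\//s ],
    [ '#common' ],
  ],
  tokenizers2: [
    [ '#common' ],
    [ 'string', /'(?:\\'|.)*?'/ ],
  ],
};

const categories1 = expand( grammar, 'tokenizers1' ).map( ( [ category ] ) => category );
const categories2 = expand( grammar, 'tokenizers2' ).map( ( [ category ] ) => category );

console.log( categories1, categories2 );
```

The position of the include matters: the shared rules are tried exactly where `[ '#common' ]` appears in the list.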

Using Other Languages

For example, HTML can contain JavaScript inside a <script> tag.

In such cases, you can use other languages by the use property and @ notation:

import { languages } from '@ryusei/light';

const { javascript, css } = languages;

export function myLang() {

return {

id : 'mylang',

name: 'My Language',

use: {

javascript: javascript(),

css : css(),

},

grammar: {

main: [

[ '#script', /<script.*?>.*?<\/script>/s ],

[ '#style', /<style.*?>.*?<\/style>/s ],

],

script: [

...

[ '@javascript', /.+(?=<\/script>)/s ]

],

style: [

...

[ '@css', /.+(?=<\/style>)/s ],

],

},

};

}

Source

Sometimes the same regex patterns appear in different tokenizers.

You can reuse such frequent patterns by defining them in the source property and referring to them with % notation.

{

source: {

func: /[_$a-z\xA0-\uFFFF][_$a-z0-9\xA0-\uFFFF]*/,

},

grammar: {

main: [

...

[ 'function', /%func(?=\s*\()/i ],

[ 'keyword', /\b((get|set)(?=\s+%func))/i ],

],

}

}

The %[ key ] will be replaced with the corresponding source[ key ].

Note that you cannot use flags in source patterns.
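The replacement can be pictured as a plain string substitution on the regex source. The following is a sketch only: `resolve` is a hypothetical helper, and it assumes that a key consists of word characters, which may differ from how the library parses `%` notation.

```javascript
// Hypothetical helper: substitutes %key with the source text of source[ key ].
function resolve( regex, source ) {
  // A replacer function is used so that "$" in the pattern is inserted literally.
  const pattern = regex.source.replace( /%(\w+)/g, ( whole, key ) => {
    return source[ key ] ? source[ key ].source : whole;
  } );

  // Only the flags of the tokenizer regex are kept.
  return new RegExp( pattern, regex.flags );
}

const source = {
  func: /[_$a-z\xA0-\uFFFF][_$a-z0-9\xA0-\uFFFF]*/,
};

const fn = resolve( /%func(?=\s*\()/i, source );

console.log( fn.exec( 'getName ()' )[ 0 ] );
console.log( fn.test( 'value = 1' ) );
```

Since only the source text is copied, flags written on a source pattern would be lost in a substitution like this one, which is consistent with the note above.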

Actions

For a regex based tokenizer, nested syntax is often very difficult to tokenize (drives me crazy 😖), such as a template literal in JavaScript.

const string = `container \` ${

isMobile()

// ${ I am a comment }

// `I am a fake template literal`

? 'is-mobile'

: `container--${ page.isFront() ? 'front' : 'page' }`

}`;

The template literal above begins at the first line and ends at the last line. How should we define a regex that matches the open/close backticks?

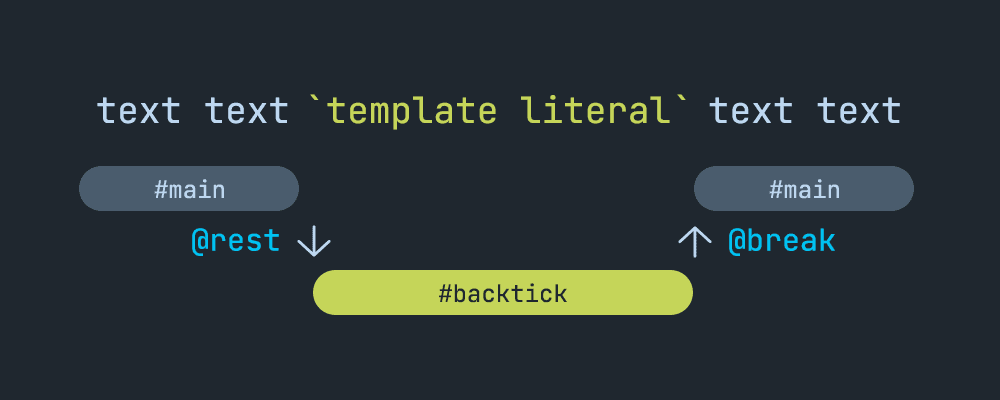

@rest and @break

...okay, let's give up on tokenizing it with regex alone

and use a simple state system with @rest and @break actions

(the idea is inspired by Monarch).

{

main: [

// Imagine here are some tokenizers for JavaScript syntax.

[ '#backtick', /`/, '@rest' ],

],

backtick: [

[ 'string', /^`/ ],

],

}

Although the # notation explained before applies sub tokenizers to the matched string,

the target is changed to the rest of the whole string if the @rest action is specified as the third parameter.

You can think of the @rest action as switching the Lexer state (from main to backtick in this example).

In the backtick state, we have to settle the matched open backtick as a string first,

otherwise, we may accidentally dive into a terrible infinite loop.

Next, search for the closing backtick:

{

main: [

[ '#backtick', /`/, '@rest' ],

],

backtick: [

[ 'string', /^`/ ],

[ 'string', /`/, '@break' ],

],

}

The @break action makes the state return to the previous one.
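The state switching can be imitated with a short recursive function. This is a simplified sketch, not the RyuseiLight lexer itself: `tokenize` and `run` are hypothetical names, only `@rest` and `@break` are handled, and unmatched characters are merged into `text` tokens.

```javascript
function tokenize( code, grammar ) {
  const tokens = [];

  // Tokenizes `input` in the state `name` and returns the consumed length.
  function run( name, input ) {
    let index = 0;

    while ( index < input.length ) {
      let matched = false;

      for ( const [ category, regex, action ] of grammar[ name ] ) {
        const sticky = new RegExp( regex.source, regex.flags.replace( 'y', '' ) + 'y' );
        sticky.lastIndex = index;
        const match = sticky.exec( input );

        if ( ! match || ! match[ 0 ] ) {
          continue;
        }

        matched = true;

        if ( category.startsWith( '#' ) ) {
          // '@rest' hands over the rest of the input instead of only the match.
          const target = action === '@rest' ? input.slice( index ) : match[ 0 ];
          index += run( category.slice( 1 ), target );
        } else {
          tokens.push( [ category, match[ 0 ] ] );
          index += match[ 0 ].length;

          if ( action === '@break' ) {
            return index; // go back to the previous state
          }
        }

        break;
      }

      if ( ! matched ) {
        const last = tokens[ tokens.length - 1 ];
        const char = input[ index++ ];

        if ( last && last[ 0 ] === 'text' ) {
          last[ 1 ] += char;
        } else {
          tokens.push( [ 'text', char ] );
        }
      }
    }

    return index;
  }

  run( 'main', code );
  return tokens;
}

const grammar = {
  main: [
    [ '#backtick', /`/, '@rest' ],
  ],
  backtick: [
    [ 'string', /^`/ ],
    [ 'string', /`/, '@break' ],
  ],
};

const tokens = tokenize( 'a `b` c', grammar );
console.log( tokens );
```

In this sketch the `^` in the first backtick rule only matches at the very start of the handed-over input, so the closing backtick falls through to the `@break` rule.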

The following picture illustrates how this grammar changes tokenization states.

Once the state transitions to #backtick, only the rules in the #backtick will be applied:

A template literal may contain embedded expressions that are enclosed with ${ and }.

They can be pulled out in the same way:

{

main: [

[ '#backtick', /`/, '@rest' ],

],

backtick: [

[ 'string', /^`/ ],

[ '#expression', /\${/, '@rest' ],

[ 'string', /`/, '@break' ],

],

expression: [

[ 'bracket', /^\${/ ],

[ 'bracket', /}/, '@break' ],

],

}

Additionally, a template literal can also appear in an expression, such as `outer ${ `inner` }`.

If we encounter another backtick in the expression state, change the state to the #backtick again:

{

expression: [

[ 'bracket', /^\${/ ],

[ '#backtick', /`/, '@rest' ],

[ 'bracket', /}/, '@break' ],

],

}

So far, we've defined basic states for a template literal.

Next, we have to tokenize the text inside the backticks as a string.

In this process, it is important to think about how to stop the search position of the Lexer just before ` and ${.

The regex can be written like /[^`$]+/ by using a negated character class. It is almost enough, but has 2 flaws:

- It misses a $ that is not followed by {

- It misses an escaped backtick and an escaped ${

To fix these problems, we need to add patterns to include them:

{

backtick: [

[ 'string', /^`/ ],

[ 'string', /(\$[^{]|\\[$`]|[^`$])+/ ],

[ '#expression', /\${/, '@rest' ],

[ 'string', /`/, '@break' ],

],

}
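The difference between the two patterns is easy to check in isolation. The snippet below is a small experiment in plain JavaScript; `consume` is a hypothetical helper that returns what a sticky regex matches at the start of the input.

```javascript
const naive    = /[^`$]+/y;
const improved = /(\$[^{]|\\[$`]|[^`$])+/y;

// Hypothetical helper: returns the text matched at index 0, or '' if nothing matches.
function consume( regex, input ) {
  regex.lastIndex = 0;
  const match = regex.exec( input );
  return match ? match[ 0 ] : '';
}

// A "$" that does not start an embedded expression.
const price = 'cost: $5 ${ x }`';

// An escaped backtick (the JavaScript string below contains a single backslash).
const escaped = 'a \\` b ${';

console.log( JSON.stringify( consume( naive, price ) ) );
console.log( JSON.stringify( consume( improved, price ) ) );
console.log( JSON.stringify( consume( naive, escaped ) ) );
console.log( JSON.stringify( consume( improved, escaped ) ) );
```

The naive pattern stops at the first `$` or backtick, while the improved one continues until it reaches a real `${` or an unescaped backtick.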

Finally, let's tokenize a text inside an expression where any JavaScript syntax may appear.

This can be simply achieved by including #main:

{

expression: [

[ 'bracket', /^\${/ ],

[ '#backtick', /`/, '@rest' ],

[ 'bracket', /}/, '@break' ],

[ '#main' ],

],

}

Since the main tokenizers already contain '#backtick', we should remove the duplicate rule from expression:

{

expression: [

[ 'bracket', /^\${/ ],

[ 'bracket', /}/, '@break' ],

[ '#main' ],

],

}

That's all! ✨

You may wonder what happens if a } appears in comments or strings,

which could break the state unexpectedly.

Right, the rules above alone do not handle such cases,

but #main is expected to include comment and string tokenizers for practical use.

A string such as '}' is consumed as a whole by the string tokenizer, so [ 'bracket', /}/, '@break' ] never gets a chance to match the } inside it:

{

main: [

[ 'comment', /\/\*.*?\*\//s ],

[ 'string', /'(?:\\'|.)*?'/ ],

...

[ '#backtick', /`/, '@rest' ],

],

backtick: [

[ 'string', /^`/ ],

[ 'string', /(\$[^{]|\\[$`]|[^`$])+/ ],

[ '#expression', /\${/, '@rest' ],

[ 'string', /`/, '@break' ],

],

expression: [

[ 'bracket', /^\${/ ],

[ 'bracket', /}/, '@break' ],

[ '#main' ],

],

}
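To check that a } inside a string does not end the expression, the grammar can be run through a simplified state machine. This is a sketch and not the library's lexer: `tokenize`, `expand` and `run` are hypothetical names, the first backtick rule is assumed to be anchored with `^`, and every unmatched character becomes its own `text` token.

```javascript
function tokenize( code, grammar ) {
  const tokens = [];

  // Replaces include rules such as [ '#main' ] (no regex) with the rules they name.
  function expand( name ) {
    return grammar[ name ].flatMap( rule => rule[ 1 ] ? [ rule ] : expand( rule[ 0 ].slice( 1 ) ) );
  }

  // Tokenizes `input` in the state `name` and returns the consumed length.
  function run( name, input ) {
    const rules = expand( name );
    let index = 0;

    while ( index < input.length ) {
      let matched = false;

      for ( const [ category, regex, action ] of rules ) {
        const sticky = new RegExp( regex.source, regex.flags.replace( 'y', '' ) + 'y' );
        sticky.lastIndex = index;
        const match = sticky.exec( input );

        if ( ! match || ! match[ 0 ] ) {
          continue;
        }

        matched = true;

        if ( category.startsWith( '#' ) ) {
          const target = action === '@rest' ? input.slice( index ) : match[ 0 ];
          index += run( category.slice( 1 ), target );
        } else {
          tokens.push( [ category, match[ 0 ] ] );
          index += match[ 0 ].length;

          if ( action === '@break' ) {
            return index;
          }
        }

        break;
      }

      if ( ! matched ) {
        tokens.push( [ 'text', input[ index++ ] ] );
      }
    }

    return index;
  }

  run( 'main', code );
  return tokens;
}

const grammar = {
  main: [
    [ 'comment', /\/\*.*?\*\//s ],
    [ 'string', /'(?:\\'|.)*?'/ ],
    [ '#backtick', /`/, '@rest' ],
  ],
  backtick: [
    [ 'string', /^`/ ],
    [ 'string', /(\$[^{]|\\[$`]|[^`$])+/ ],
    [ '#expression', /\${/, '@rest' ],
    [ 'string', /`/, '@break' ],
  ],
  expression: [
    [ 'bracket', /^\${/ ],
    [ 'bracket', /}/, '@break' ],
    [ '#main' ],
  ],
};

const tokens = tokenize( "`a ${ '}' } b`", grammar );
console.log( tokens );
```

In the output, the quoted `'}'` appears as a single string token, and only the second } is reported as the bracket that closes the expression.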

You can see my JavaScript tokenizers here.

@back

The @back action also breaks a state, but a matched string is not tokenized.

{

// "}" is tokenized as a "bracket":

tokenizers: [

[ 'bracket', /}/, '@break' ],

],

// "}" is not tokenized:

tokenizers: [

[ '', /}/, '@back' ],

],

}

This is useful when a previous state also needs to end with the same character.

@debug

If you want to know what your tokenizer matches, set the @debug special action as the fourth parameter (not the third!).

This action outputs the matched result as a log on your browser console.

[ 'bracket', /{/, '@rest', '@debug' ]

Do not forget to remove it from the production build.

Categories

Each token is expected to be classified into one of the following categories that will be highlighted by CSS:

- keyword

- constant

- comment

- tag

- selector

- atrule

- attr

- prop

- value

- variable

- entity

- cdata

- prolog

- identifier

- string

- number

- boolean

- function

- class

- decorator

- regexp

- operator

- bracket

- delimiter

- symbol

- space

- text

All categories are defined as constants.